Inspired by the brain: Networks of nanoparticles can compute like 'spiking' neurons - Annual Report 2023

12 April, 2024

The human brain is likely the most energy-efficient computing system ever to have existed. It routinely carries out tasks of astonishing depth, breadth and complexity, yet it runs on just 12 watts of power, all sourced from the food we eat. By comparison, a typical laptop draws around 60 watts of electricity, a desktop PC needs closer to 175 watts,[1] and data centres use many times more. With big data and artificial intelligence placing huge energy demands on today's computers, it's no wonder that those seeking to push the boundaries of computing are finding inspiration in neuroscience. This includes MacDiarmid Institute scientists at Te Whare Wānanga o Waitaha University of Canterbury, who are developing novel neuromorphic devices based on nanoparticle networks. Their latest advance, based on simulations of the intrinsic neuron-like spiking of their devices, indicates that these networks can perform a range of complex tasks, including image recognition.

Much of the brain's unrivalled computational ability derives from its structure and the way in which it processes information. The brain consists of dense, interconnected networks of elementary units called neurons, which generate spikes of electrical signals that transmit information through their frequency and timing. Neuronal networks appear to operate at what's known as a 'critical point', where their behaviour is poised between stability and instability. Many researchers believe that this criticality is what gives the brain its computational superpowers.
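Criticality is often illustrated with a simple branching process, in which each activation triggers on average σ further activations (the branching ratio). The sketch below is a generic textbook toy model, not the model used for the nanoparticle networks: the two-connection topology, the per-connection probability σ/2 and the size cap are all illustrative assumptions. Below σ = 1 activity dies out quickly (the mean avalanche size is 1/(1 − σ), so 2 for σ = 0.5), above σ = 1 it tends to run away, and at σ = 1 avalanches of widely varying sizes occur.

```python
import random


def avalanche_size(sigma, rng, max_size=1000):
    """Total activations triggered by a single seed event (capped at max_size).

    Toy assumption: every active unit has two downstream connections, and each
    connection activates its target with probability sigma / 2, so sigma is the
    mean number of activations each activation triggers (the branching ratio).
    """
    p = sigma / 2
    active, size = 1, 1
    while active > 0 and size < max_size:
        # Count how many of the 2 * active connections fire this generation.
        active = sum(1 for _ in range(2 * active) if rng.random() < p)
        size += active
    return min(size, max_size)


def avalanche_stats(sigma, trials=2000, max_size=1000, seed=0):
    """Mean avalanche size and the fraction of avalanches that hit the cap."""
    rng = random.Random(seed)
    sizes = [avalanche_size(sigma, rng, max_size) for _ in range(trials)]
    mean = sum(sizes) / trials
    runaway = sum(1 for s in sizes if s >= max_size) / trials
    return mean, runaway


# Subcritical, critical and supercritical branching ratios.
results = {sigma: avalanche_stats(sigma) for sigma in (0.5, 1.0, 1.5)}
for sigma, (mean, runaway) in results.items():
    print(f"sigma={sigma}: mean size {mean:.1f}, runaway fraction {runaway:.2f}")
```

In this toy model, σ = 0.5 gives small avalanches that never reach the cap, σ = 1.5 gives mostly runaway activity, and σ = 1 sits in between: most avalanches stay small, but a few grow very large.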

Networks of nanoparticles

Schematic of a percolating network of nanoparticles

To emulate this behaviour, Professor Simon Brown, who leads our Future Computing Research Programme, looked to self-organised networks of metallic nanoparticles. Back in 2013,[2] his team showed that when you apply a voltage to these networks, atomic-scale filaments form between the nanoparticles, producing electrical spikes similar to those seen in the brain. The work has continued apace ever since. In a new paper published in Nano Letters, the team report a breakthrough: simulations show that the spiking behaviour of Percolating Networks of Nanoparticles (PNNs) can even be used to classify handwritten digits.

Computers have completely changed the world. To enable further progress, especially around artificial intelligence, we need to either be thinking about different algorithms or different hardware, or both.

Sofie Studholme, MacDiarmid Institute PhD student

The lead author on the paper is PhD student Sofie Studholme, who has been relishing the challenges of this area of research. Following completion of her undergraduate electrical engineering degree at Te Whare Wānanga o Waitaha University of Canterbury, Sofie considered her next steps: "I like really complex problems; the ones that have you waking up in the middle of the night because you might have a solution." Her final-year project supervisor, Emeritus Professor Phil Bones, told Sofie about a PhD opportunity at the MacDiarmid Institute, focused on neuromorphic computing. "As soon as I heard about the research, I decided to apply. I started the PhD in July 2022."

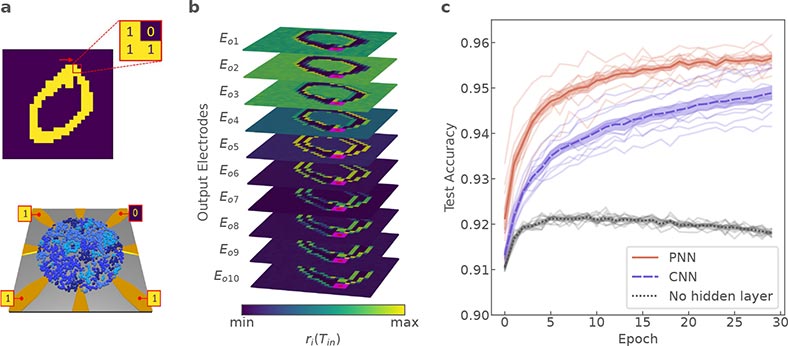

For this paper, her first as lead author, Sofie set out to investigate what kinds of computation a PNN could perform. She started by testing it with standard logic operations (AND, OR, XOR, etc.), which are widely used to establish the computational capability of a network. The XOR task in particular is challenging because it is not linearly separable: no single straight line can divide the inputs that should give 1, (0,1) and (1,0), from those that should give 0, (0,0) and (1,1), so the network must perform a non-linear transformation of its inputs. "The simulations showed that with optimal parameters, our system can compute all possible logical operations on a 2-bit input with 99% accuracy," she says.
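To see why XOR needs a non-linear step, the sketch below enumerates all 2^4 = 16 logical operations on a 2-bit input and tests each with a single-layer perceptron, a purely linear classifier. This is a generic textbook illustration, not the method of the Nano Letters paper: the perceptron, the function name `perceptron_separable` and the extra product feature x1·x2 are assumptions, the feature standing in for the kind of non-linear response a physical network could supply to a simple trained readout.

```python
from itertools import product

# The four possible 2-bit inputs; each truth table lists outputs in this order.
INPUTS = [(0, 0), (0, 1), (1, 0), (1, 1)]


def perceptron_separable(targets, features, epochs=1000):
    """Return True if a single-layer perceptron classifies every point correctly.

    The perceptron learning rule is guaranteed to converge when the data are
    linearly separable, and for these four-point problems it does so well within
    the epoch budget. If no epoch is error-free, no linear boundary was found.
    """
    w = [0.0] * len(features[0])
    b = 0.0
    for _ in range(epochs):
        errors = 0
        for x, t in zip(features, targets):
            y = 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0
            if y != t:
                errors += 1
                step = t - y  # +1 if the output was too low, -1 if too high
                w = [wi + step * xi for wi, xi in zip(w, x)]
                b += step
        if errors == 0:
            return True
    return False


# All 2**4 = 16 logical operations on a 2-bit input, as output tuples.
all_ops = list(product([0, 1], repeat=4))

# Raw inputs only: a purely linear readout.
raw = [[x1, x2] for x1, x2 in INPUTS]
linear_ok = [op for op in all_ops if perceptron_separable(op, raw)]

# Add one non-linear feature: the product x1 * x2 (equivalently x1 AND x2).
expanded = [[x1, x2, x1 * x2] for x1, x2 in INPUTS]
nonlinear_ok = [op for op in all_ops if perceptron_separable(op, expanded)]

print(len(linear_ok), "of 16 solvable with raw inputs")
print("unsolvable:", sorted(set(all_ops) - set(linear_ok)))  # XOR, then XNOR
print(len(nonlinear_ok), "of 16 solvable with the product feature")
```

With raw inputs, only XOR (0, 1, 1, 0) and its complement XNOR (1, 0, 0, 1) defeat the linear classifier; once a single non-linear feature is available, all 16 operations become solvable. This mirrors, in miniature, the general idea of pairing a non-linear system with a simple trained readout.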

Next, Sofie set the PNN a more difficult challenge: the Modified National Institute of Standards and Technology (MNIST) handwritten digit classification task. She describes this as "...kind of like the 'hello world' of machine learning." The MNIST database consists of 70,000 greyscale images of handwritten digits, split into 60,000 training images and 10,000 test images.[3] While our brains are expert at classifying images and patterns like these, traditional computers find them far more challenging. So, Sofie was thrilled to find that the neuromorphic PNN "achieve(d) a test accuracy of up to 96.2%, comparable to the best previous results from in-materia systems."

Her PhD supervisor, and paper co-author, Simon, says, "Sofie's done incredibly well to get this research done in such a short space of time. And the resulting paper received remarkably positive feedback from reviewers - I think the best I've ever seen."

The next stage will be to implement Sofie's results on the latest PNN devices fabricated by her colleagues in the lab at Canterbury. Alongside that, she'll be extending her simulation-based work to investigate the computing potential of PNNs operating together. The goal, she says, is to "...develop computational schemes that can more fully exploit these critical spikes for information processing."


Training AI tools like ChatGPT consumes enormous, unsustainable amounts of electricity. So, finding alternative computing approaches - ones that can do the same computation with less power - is an absolute must.

Professor Simon Brown, MacDiarmid Institute Principal Investigator

Within the wider research network of the Institute

Sofie's work is just one of a diverse range of projects in the MacDiarmid Institute's Future Computing Research Programme. Alongside neuromorphic architectures, our scientists are also investigating novel devices that use superconductivity, topological insulators and biological cells, all in an effort to cut the energy consumption of computers.

"Computers have completely changed the world," explains Sofie. "But transistor-based hardware is reaching fundamental limitations. To enable further progress, especially around artificial intelligence, we need to either be thinking about different algorithms or different hardware, or both."

Simon continues, "Training AI tools like ChatGPT consumes enormous, unsustainable amounts of electricity. So, finding alternative computing approaches - ones that can do the same computation with less power - is an absolute must."

Publication: Sofie J. Studholme, Zachary E. Heywood, Joshua B. Mallinson, Jamie K. Steel, Philip J. Bones, Matthew D. Arnold, and Simon A. Brown, "Computation via neuron-like spiking in percolating networks of nanoparticles," Nano Lett. 23, 10594 (2023).

References

1. Princeton University Press, "Is the human brain a biological computer?"

2. Physical Review Letters, "Quantized Conductance and Switching in Percolating Nanoparticle Films"

3. mxnet, "Handwritten Digit Recognition"